The Future of Immersive Entertainment

By Eric Klassen, Executive Producer, CableLabs

The pandemic has accelerated the adoption of video communication technologies for interpersonal communications and entertainment at an unexpected rate. The idea of going to a screen in the home rather than doing something in-person has now been forced into day-to-day life for all generations and across all public sectors. This is a major shift in consumer behavior in an incredibly short amount of time.

When the threat of the pandemic is under control, most location-based experiences will revert to a normal routine: sports stadiums and airports will fill up, students and office workers will return, and concerts will have fans falling over each other in the front rows again. The difference, though, will be the availability of an alternative virtualized experience and the number of people who will opt for it. With ever-improving displays, compelling applications, and better network performance, many people may continue to work, learn, and play at home.

This consumer trend is not new. The great majority of football fans watch televised games even though going to the stadium is an available option: the high production value, amazing displays, rich sound, and avoidance of lines, crowds, and costly tickets make staying home a compelling alternative. The pandemic has put movie theaters into a similar situation, and studios are being forced to revisit how best to get new content to consumers without destroying the theater ecosystem in the process. That consumers choose a private home experience over these location-based entertainment venues is a testament to how far media production, displays, and network technology have come over the last generation, and they’re only getting better.

Virtual Reality (VR) and Augmented Reality (AR) displays have come to market, and the experience of viewing an environment from a flexible viewpoint rather than one predetermined camera position – known as “six degrees of freedom” or 6DoF – has a solid foothold, mostly in the game industry. Although the applications outside of gaming are limited, developers are learning what works best, and gamers have become comfortable with spatial content and what can be done with it. A popular argument for 6DoF applications is that they’re a stepping-stone until better, more user-friendly spatial solutions find mass-consumer adoption.

Immersive displays using light field or holographic technology are no longer sci-fi, and the ability to view volumetric spatial media without a headset is now available. Live action light field capture technology has been around for about a decade, but there has never been a commercially available display technology that allows viewers to experience the changing parallax of the media without a headset. Up until now, the media was so massive that it could barely run on local machines, and the idea of distributing it over the network was out of the question. Even if there were a display that could project the light field content, there was no way to get it to consumers unless petabyte-sized files could be downloaded and run locally. These limitations are on the verge of being overcome: early displays are out of the prototype stage and showing promise, compression standards and network solutions are in development, and early use cases are paving a pathway to market.

However, some challenges are surfacing for seemingly simple productions.

Consider how a simple subject, such as a talking-head newscaster, appears on a standard display. When a newscaster speaks to the audience, they look directly into the camera, and the audience sees the newscaster looking directly at them. This single television view gives the same image to a viewer anywhere in the room.

With holographic displays, this is not the case. A viewer on the right side of a couch will see one side of the newscaster, and a viewer on the left will see the other side. This might seem like a trivial difference at first, but it raises a series of production questions. How will viewers react to sitting off-center from the newscaster’s gaze? If there are issues with this viewing experience, what can be done about it? How should multiple viewers in the room be handled? Should a multi-view function be designed that can be turned on and off?

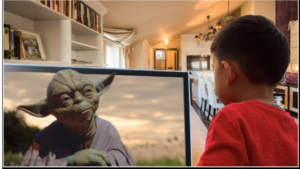

If viewing positions can be identified in the viewing area, a new level of immersion will become possible, especially for gaming experiences. Imagine a smart character that looks directly at one player to say something, then turns to another player to say something else. Or imagine a magic scroll whose message is hidden from one player but visible to another player in the room. Game techniques like this have been touched on with headsets, but light field displays introduce a new level of immersion that creatives are very excited about.

If consumers embrace holographic displays for improved telepresence, a critical development will come from better eye-to-eye contact. Currently, video telepresence is limited to a single camera view that’s slightly offset from the screen, and complex image processing software is required to correct the offset eye-gaze. Light field capture would provide the data needed to engineer one-to-one, one-to-many, and many-to-many social gatherings with more natural eye contact.

As display, capture, and network technologies improve, the future is ripe for a holographic media ecosystem. What does a holographic experience in the home and office look like in ten years and beyond? Is there a holodeck in sight? The Immersive Digital Experiences Alliance (IDEA) was founded with leaders in this ecosystem to imagine what this future will be and to work towards realizing it.